Mistral Instruct 7B Finetuning on MedMCQA Dataset

Finetuning Mistral Instruct 7B on Google Colab using QLoRA

Mistral AI’s Mistral Instruct 7B is one of the most popular open-source Large Language Models (LLMs). It has achieved state-of-the-art (SOTA) performance on many benchmarks compared with other 7B models. In this post, I’ll walk through the steps required to build an LLM that can answer medical entrance exam questions. We’ll finetune Mistral Instruct 7B on the MedMCQA dataset and compare the original baseline model with the finetuned model.

MedMCQA is a large-scale Multiple-Choice Question Answering (MCQA) dataset designed to address real-world medical entrance exam questions. It has more than 194k high-quality and diverse AIIMS and NEET PG entrance exam MCQs covering around 2.4k healthcare topics and 21 medical subjects. More information about the dataset is available in the medmcqa/medmcqa repository on GitHub (github.com).

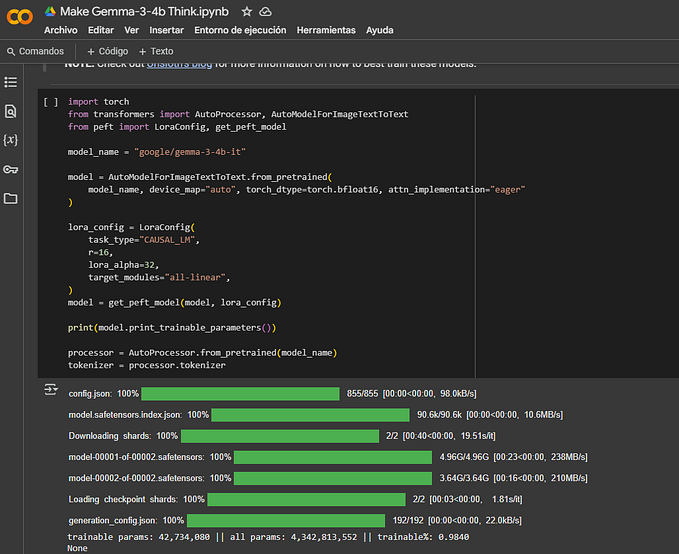
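To make the task concrete, here is a minimal sketch of how one MedMCQA-style record could be turned into a Mistral Instruct prompt. The field names (`question`, `opa` to `opd`, `cop`) and the 0-based `cop` index are my assumptions about the dataset schema, the helper names are hypothetical, and the sample record is made up for illustration rather than taken from the dataset. The `[INST] ... [/INST]` wrapper follows the instruction format used by Mistral Instruct models.

```python
# Hypothetical helpers: format one MedMCQA-style record as a Mistral Instruct prompt.
# Assumed schema: `question`, four options `opa`..`opd`, and `cop` as a 0-based
# index of the correct option. Check the dataset card before relying on this.
OPTION_KEYS = ["opa", "opb", "opc", "opd"]
LETTERS = ["A", "B", "C", "D"]


def format_prompt(record: dict) -> str:
    """Build an instruction prompt listing the question and its four options."""
    options = "\n".join(
        f"{letter}. {record[key]}" for letter, key in zip(LETTERS, OPTION_KEYS)
    )
    instruction = (
        "Answer the following medical multiple-choice question "
        "with the letter of the correct option.\n\n"
        f"Question: {record['question']}\n{options}"
    )
    # Mistral Instruct models expect the user turn wrapped in [INST] ... [/INST].
    return f"[INST] {instruction} [/INST]"


def answer_letter(record: dict) -> str:
    """Map the assumed 0-based `cop` index to its option letter."""
    return LETTERS[record["cop"]]


# Made-up record, for illustration only (not taken from MedMCQA).
sample = {
    "question": "Which vitamin deficiency causes scurvy?",
    "opa": "Vitamin A",
    "opb": "Vitamin B12",
    "opc": "Vitamin C",
    "opd": "Vitamin D",
    "cop": 2,
}

print(format_prompt(sample))
print("Expected answer:", answer_letter(sample))
```

During finetuning, the prompt and the answer letter would typically be concatenated into a single training text; the exact template is a design choice.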

Due to GPU and memory constraints on Google Colab, we’ll use a GPTQ (post-training quantized) version of Mistral Instruct 7B, which TheBloke has published on the HuggingFace Hub. We will then use LoRA, a parameter-efficient technique, to finetune the model. This keeps memory consumption in check.
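A rough back-of-the-envelope calculation shows why this combination helps. The sketch below is only an estimate under my own assumptions: about 7.24 billion parameters, a hidden size of 4096, a key/value projection width of 1024, 32 layers, and a hypothetical LoRA setup of rank 16 applied to the query and value projections. None of these hyperparameters come from this post, and the estimate ignores quantization metadata, activations, and optimizer state.

```python
# Back-of-the-envelope estimate; every constant below is an assumption.
HIDDEN = 4096          # assumed hidden size of Mistral 7B
KV_DIM = 1024          # assumed key/value width (8 KV heads x 128 dims)
NUM_LAYERS = 32        # assumed number of transformer layers
TOTAL_PARAMS = 7.24e9  # approximate parameter count


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA adds A (rank x d_in) and B (d_out x rank) next to a frozen weight."""
    return rank * (d_in + d_out)


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


rank = 16  # hypothetical LoRA rank
# Hypothetical target modules: q_proj (4096 -> 4096) and v_proj (4096 -> 1024).
per_layer = lora_params(HIDDEN, HIDDEN, rank) + lora_params(HIDDEN, KV_DIM, rank)
trainable = per_layer * NUM_LAYERS

print(f"fp16 weights : {weight_memory_gb(TOTAL_PARAMS, 16):.1f} GB")
print(f"4-bit weights: {weight_memory_gb(TOTAL_PARAMS, 4):.1f} GB")
print(f"LoRA trainable parameters: {trainable:,}")
print(f"Share of all parameters  : {trainable / TOTAL_PARAMS:.4%}")
```

Under these assumptions, the 4-bit weights take roughly a quarter of the fp16 footprint, and the LoRA adapters add only a few million trainable parameters, which is a small fraction of a percent of the full model.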

If you are unfamiliar with Mistral 7B or terms such as GPTQ and LoRA, I suggest you go through the following article:

You can refer to this article for an in-depth understanding of LLM concepts. It contains a curated list of important papers and quality articles published online on LLMs.

Let’s first install the following libraries.

!pip install -q accelerate peft bitsandbytes

!pip install -q git+https://github.com/huggingface/transformers

!pip install -q trl py7zr auto-gptq optimum

from google.colab import…